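Today the frontend ran into a problem: requests going through the frontend's [reverse proxy](/posts/nginx-proxy/) to the backend upstream stayed pending indefinitely.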

## Timeout?

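I assumed the backend service was under heavy load and could not respond in time, so I updated the proxy configuration for the upstream and added timeouts. It worked immediately and the problem went away.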

```nginx
location /api/ {
    proxy_set_header Host $http_host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header Upgrade $http_upgrade;
    proxy_set_header Connection 'upgrade';
    rewrite ^/api/(.*)$ /$1 break;
    proxy_pass http://api_server/;
    proxy_connect_timeout 5s;
    proxy_send_timeout 10s;
    proxy_read_timeout 10s;
}
```

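Some time later, the backend stopped receiving requests from the frontend again, and nginx kept logging status 499 (nginx's non-standard code for a client closing the connection before nginx could send a response).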

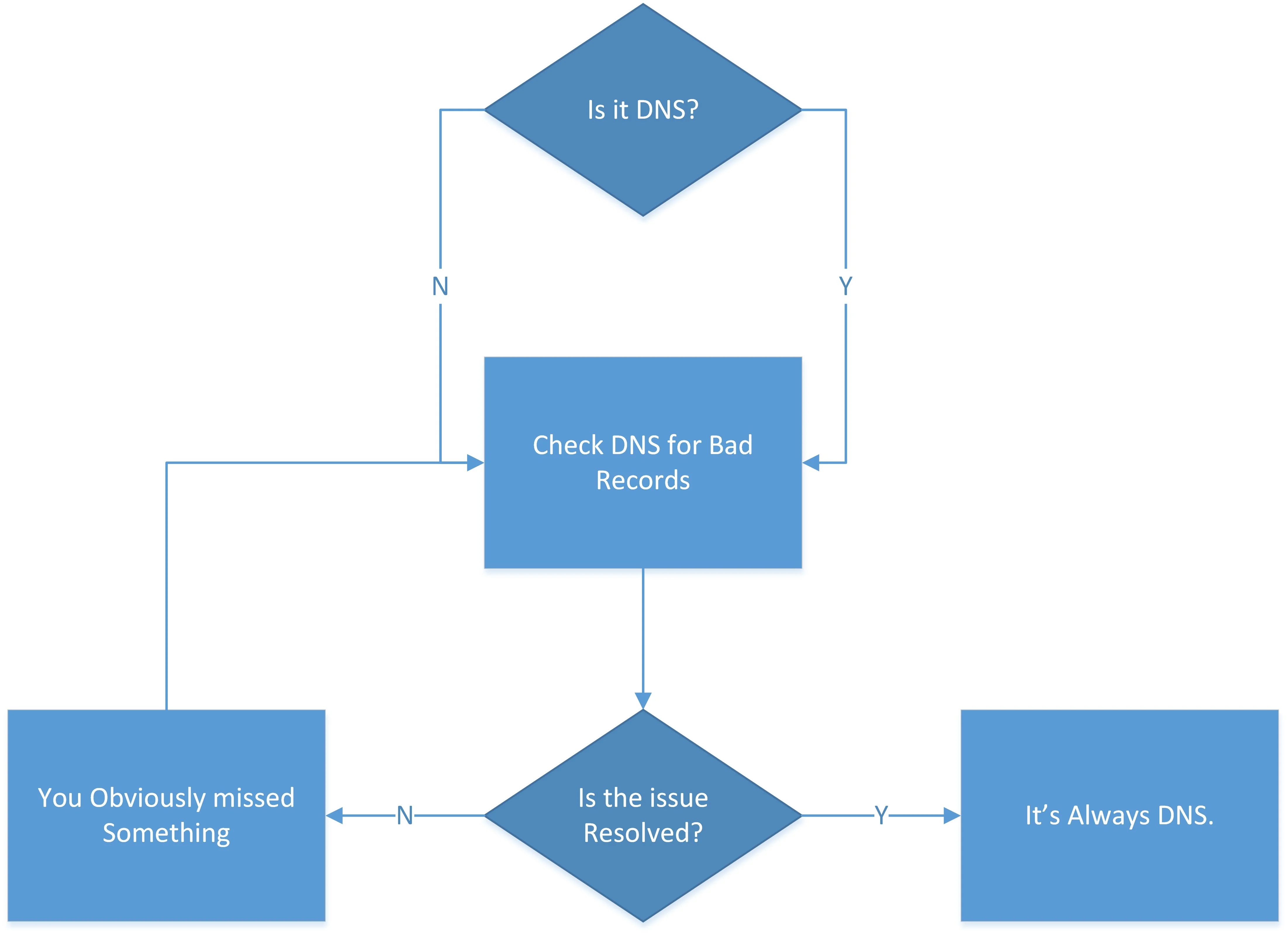

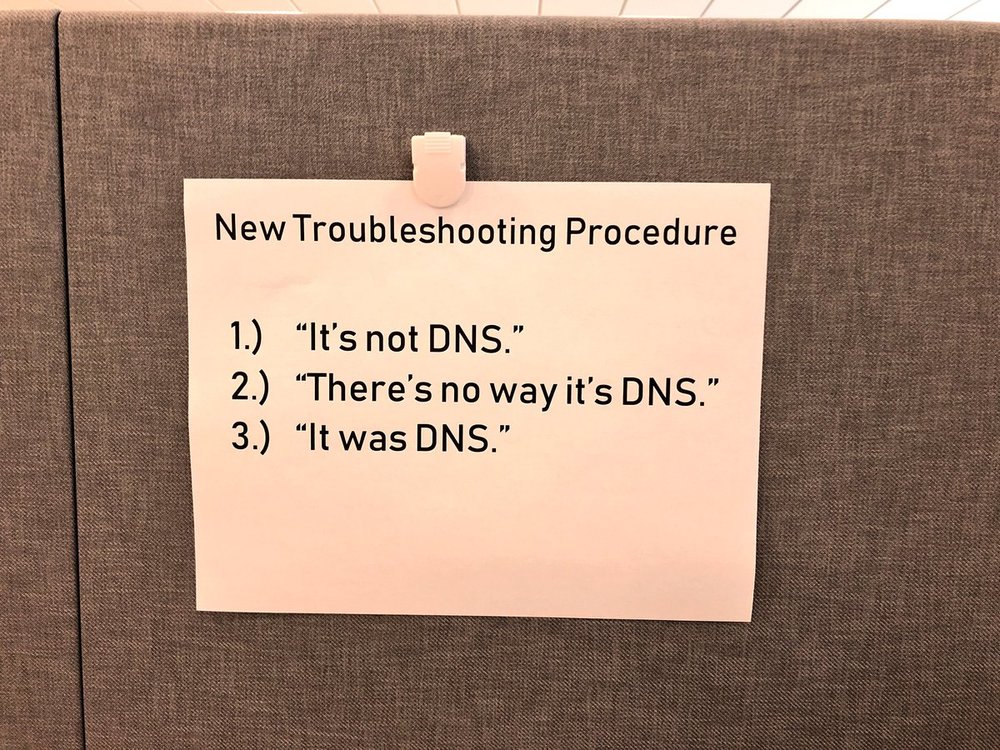

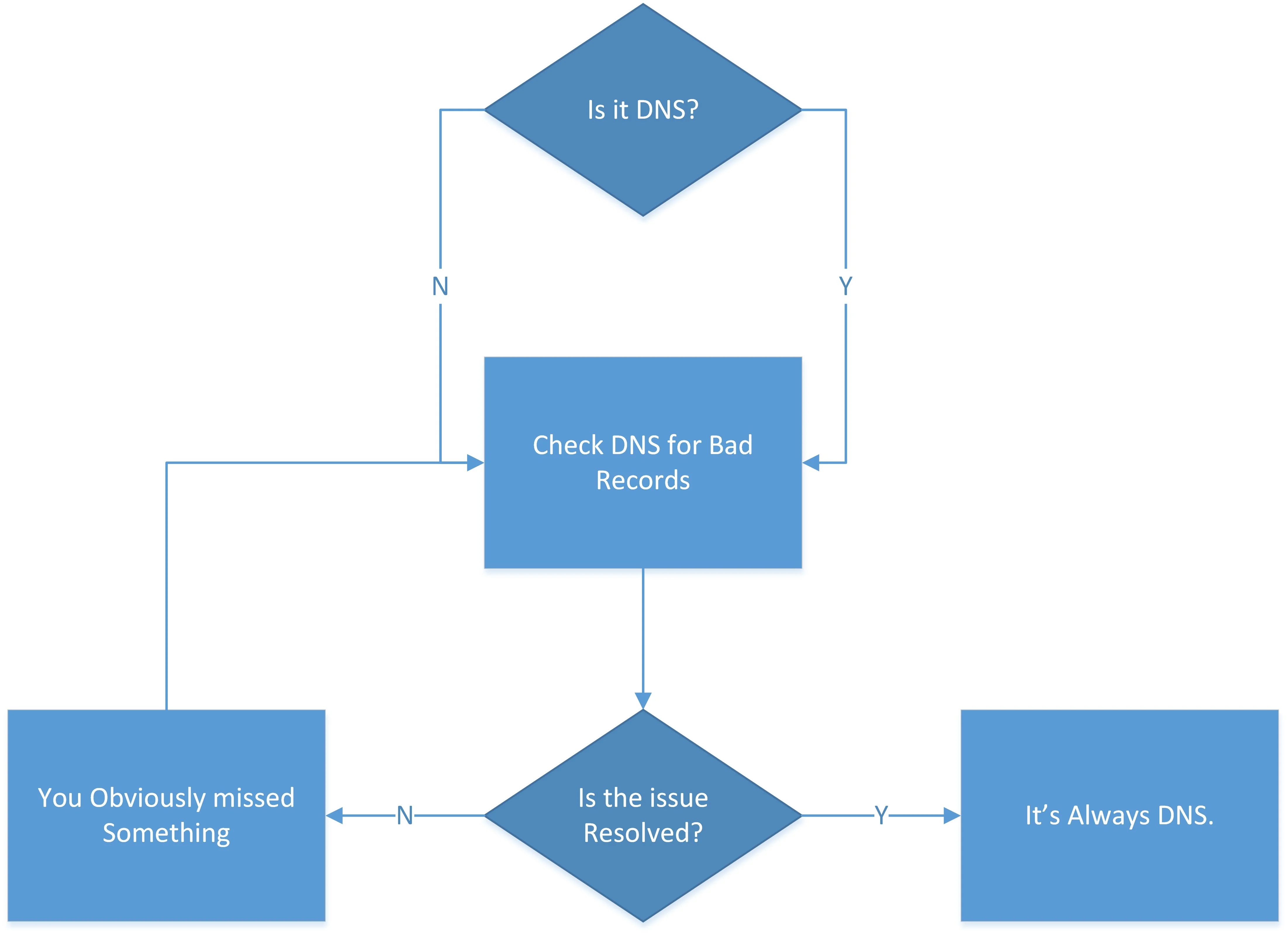
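When chasing 499s like these, it can help to log which upstream address nginx actually connected to and how long each phase took. Below is a minimal sketch. The format name `upstream_debug` and the log path are placeholders, not part of the original setup.

```nginx
# Goes in the http {} context.
# upstream_debug is a hypothetical format name.
log_format upstream_debug '$remote_addr [$time_local] "$request" $status '
                          'upstream=$upstream_addr '
                          'request_time=$request_time '
                          'upstream_connect_time=$upstream_connect_time '
                          'upstream_response_time=$upstream_response_time';

server {
    # Placeholder path; point this at wherever your access log lives.
    access_log /var/log/nginx/access.log upstream_debug;
}
```

If `upstream=` shows an IP that no longer matches the backend's current address, stale name resolution is a likely suspect.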

## DNS!

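After some investigation, the root cause turned out to be nginx's DNS caching: nginx resolves the hostnames in `proxy_pass` and `upstream` once, when the configuration is loaded, and keeps using those IP addresses until it is reloaded or restarted.

It turned out that every time the backend team updated their Kubernetes Deployment, their pipeline also recreated the Service, which changed the Service's IP.

After talking with the backend team, they changed their deployment pipeline script so that it no longer recreates the Service, and the problem was solved :)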

## Debug

Force nginx to resolve DNS (of a dynamic hostname) every time when doing proxy_pass?

```nginx
server {
    #...
    resolver 127.0.0.1;
    set $backend "http://dynamic.example.com:80";
    proxy_pass $backend;
    #...
}
```
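Applied to the `/api/` location from earlier, the variable approach might look roughly like the sketch below. The resolver IP, the Service DNS name, and the port are assumptions for illustration; they are not taken from the original setup.

```nginx
server {
    listen 80;

    # Assumption: 10.96.0.10 is the cluster DNS Service IP; check your own cluster.
    # valid=10s caps how long nginx caches an answer, overriding the record's TTL.
    resolver 10.96.0.10 valid=10s;

    location /api/ {
        # Hypothetical Service name and port. Use a fully qualified DNS name:
        # nginx's resolver does not apply the search domains from /etc/resolv.conf.
        # If the host matched an upstream {} block name, no DNS lookup would happen.
        set $api_backend "http://api-server.default.svc.cluster.local:8080";

        proxy_set_header Host $http_host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

        # Strip the /api/ prefix; with a variable and no URI part in proxy_pass,
        # the rewritten URI is what gets sent to the backend.
        rewrite ^/api/(.*)$ /$1 break;
        proxy_pass $api_backend;

        proxy_connect_timeout 5s;
        proxy_send_timeout 10s;
        proxy_read_timeout 10s;
    }
}
```

Because the target is a variable, nginx resolves the name at request time through `resolver` instead of only once at startup, so a changed Service IP is picked up after the cached answer expires.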

resolver

```nginx
resolver 10.0.0.1;

upstream dynamic {
    zone upstream_dynamic 64k;

    server backend1.example.com weight=5;
    server backend2.example.com:8080 fail_timeout=5s slow_start=30s;
    server 192.0.2.1 max_fails=3;
    server backend3.example.com resolve;
    server backend4.example.com service=http resolve;

    server backup1.example.com:8080 backup;
    server backup2.example.com:8080 backup;
}

server {
    location / {
        proxy_pass http://dynamic;
        health_check;
    }
}
```
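Trimmed down to just the part that matters here, re-resolving a single backend name inside an `upstream` block could look like the sketch below. The resolver IP and the Service name are assumptions. Also note that `resolve` was a commercial-only parameter before nginx 1.27.3, and `health_check` remains part of the commercial subscription, so check your nginx version before relying on this.

```nginx
# Assumption: 10.96.0.10 is the cluster DNS Service IP.
resolver 10.96.0.10 valid=10s;

upstream api_server {
    # resolve requires the group to live in shared memory, hence the zone.
    zone api_server 64k;

    # Hypothetical Service DNS name and port; resolve makes nginx track
    # IP changes for this name without a reload.
    server api-server.default.svc.cluster.local:8080 resolve;
}

server {
    listen 80;

    location /api/ {
        rewrite ^/api/(.*)$ /$1 break;
        proxy_pass http://api_server;
    }
}
```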

## Use environment variables (not working)

/etc/nginx/templates/*.template

```dockerfile
# build stage
FROM node:lts-alpine AS build-front
ARG front
WORKDIR /app
COPY package*.json ./
RUN npm config set registry https://mirrors.my-dear-company.com/npm-ok/
RUN npm install
COPY . ./
RUN npm run build

FROM nginx:stable
COPY nginx.template.conf /etc/nginx/templates/api.conf.template
COPY --from=build-front /app/build /usr/share/nginx/html
EXPOSE 80
```
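For reference, the official nginx image runs `envsubst` over `/etc/nginx/templates/*.template` at container start and writes the result to `/etc/nginx/conf.d/`. A template for this image might look like the sketch below. `API_HOST` and `RESOLVER_IP` are hypothetical environment variables, and this is not the actual `nginx.template.conf` from the project.

```nginx
# Sketch of nginx.template.conf, rendered to /etc/nginx/conf.d/api.conf.
# ${API_HOST} and ${RESOLVER_IP} are hypothetical env vars supplied to the container.
# Only defined environment variables are substituted, so nginx variables
# such as $uri and $backend are left untouched.
server {
    listen 80;

    resolver ${RESOLVER_IP} valid=10s;

    location / {
        root /usr/share/nginx/html;
        try_files $uri /index.html;
    }

    location /api/ {
        set $backend "http://${API_HOST}";
        rewrite ^/api/(.*)$ /$1 break;
        proxy_pass $backend;
    }
}
```

The substitution happens only once, when the container starts. On its own it does not make nginx re-resolve a hostname later; that still depends on `resolver` together with a variable in `proxy_pass`.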